Saturday, October 9, 2010

CSS vs Table Positioning

So, what's wrong with using tables for layout?

Well, first, let's look at it from the user's perspective.

Using tables creates a LOT of extra HTML on the page. This adds to the overall size of the page, which increases its download time. Remember that a large portion of your visitors are still on dial-up, and if the page takes over 8 seconds to load, you can generally count on losing up to half of your visitors!

Along the same lines, one of the most common uses of tables is to place the entire page in a table to constrain its overall width. This means that everything inside that table must load BEFORE the table will be displayed, leaving your visitors staring at a blank page for 2, 4, 6 or even 8 seconds or more before it finally appears. Talk about a lot of lost visitors and sales!

Ok...now let's look at it from a search engine optimization perspective.

Again, table layouts require a LOT of extra HTML. This means that the engines must wade through a huge amount of HTML to get to the actual content of the page. Generally speaking, the better the text-to-code ratio on your page, the better it will rank. In other words, the more content and the less HTML, the better. Have you ever noticed when looking through the SERPs that some of the simplest webpages tend to rank the best? This is because those pages have almost no HTML in them; they are almost completely text. The simpler the page, the better, because the engines are able to understand it more easily.
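To put a number on the "text-to-code ratio" idea, here is a minimal Python sketch. There is no standard definition of the ratio, so it assumes one: visible text characters (outside script and style elements, with whitespace collapsed) divided by the total characters of HTML. The function name text_to_code_ratio and the two sample pages are made up for illustration, and nothing here reflects how any search engine actually measures a page.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_code_ratio(html: str) -> float:
    """Visible-text characters divided by total HTML characters (an assumed definition)."""
    if not html:
        return 0.0
    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    # Collapse runs of whitespace so indentation is not counted as "content".
    text = " ".join(" ".join(collector.chunks).split())
    return len(text) / len(html)


# The same sentence, marked up two ways (hypothetical snippets).
table_page = (
    '<table width="760" border="0" cellpadding="0" cellspacing="0"><tr>'
    '<td valign="top"><font face="Arial" size="2">Blue widgets for sale.</font>'
    "</td></tr></table>"
)
css_page = '<div id="content"><p>Blue widgets for sale.</p></div>'

print(f"table layout: {text_to_code_ratio(table_page):.0%}")
print(f"CSS layout:   {text_to_code_ratio(css_page):.0%}")
```

Both snippets carry identical text, so the difference in the two percentages comes entirely from the markup.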

Yet another reason is an extension of the first. The more HTML code you have on a page, the more opportunity there is for errors to creep into your code and keep the engines from properly indexing your page. The algorithms needed to extract the actual content from a webpage are pretty amazing and forgiving, but every now and then a seemingly simple error will cause an engine to index a page improperly. The last thing you want is for a simple error to be costing you thousands of dollars because an engine can't properly index the page.

Both of the above examples boil down to one simple thing: understanding. The whole concept of SEO is to optimize a page so that the engines can better understand what it is about. The simpler the page (meaning less HTML and more content), the easier it is for an engine to understand. To me, this, above all else, is the ultimate reason to seriously consider using CSS for layout in place of tables.

Examples

So far, I have given you reasons why tables are bad for SEO, but I haven't shown you any examples of the difference between tables and CSS, so now I'm going to. After all, a picture is worth a thousand words, right?

To illustrate this, you’ll need to visit some examples I found at

http://www.hotdesign.com/seybold/index.html

I would recommend also reading the entire section at the above URL when you have a chance as it is short and worth the read.

Ok...let's look at a normal-looking table layout by visiting the URL below:

http://www.hotdesign.com/seybold/15inteadofthis.html

Ok...that looks nice enough. Well-formatted, simple, clean. Right??

Ok...let's look at that same table again with its cells outlined. Also, be sure to view the source code for the page below after viewing it.

http://www.hotdesign.com/seybold/16nasty.html

Whoa. What the heck happened to our pretty, clean table? It seems it wasn't quite as clean and crisp as it looked on the surface.

That's the problem with most table layouts. While they may look good on the surface, the code to produce them is quite often atrocious.

Now...let's look at a different table layout. This is still an actual table, but it is making use of CSS for the layout within the table.

http://www.hotdesign.com/seybold/17dothis.html

Wow...much better, huh? It's still a table, but by using CSS to tell the browser how to render everything, we shrank the code down to almost nothing.

This is just one example, but there are many more. I recently looked at a site a client was about to launch. It was a beautiful site, but it was built with tables for everything. In total, 95% of the code on the homepage was markup! I had him get the site redesigned using CSS-P (CSS positioning). After the redesign, about 80% of the page was content, with only 20% being markup.

Which do you think the engines would prefer??

It takes some time, but it's worth it. Just think back to when you were first learning how to code tables. It was confusing, wasn't it? Now it's probably second nature. CSS is the same way. Once you get the hang of it, you will wonder how you ever got along without it.

In addition to the reduction in on-page code, how would you like to be able to change the look and feel of every page on your site by changing one file? You simply can't do that with normal table designs. With CSS, you can make sitewide changes by editing one stylesheet.

I am still in the process of going through my older sites and recoding them, but all of my new sites are CSS driven. It just makes things easier in all aspects. They may not be entirely styled by CSS, but the vast majority of the styling is. Tables have their place and are still useful, but by using CSS to style your tables (not to mention the rest of your pages), you will find you have a MUCH better text-to-code ratio.

If you are interested in learning more about CSS, there are a number of books you can check out. Probably the most well respected is "Cascading Style Sheets: The Definitive Guide" by Eric Meyer.

You can also check out http://www.w3.org/TR/REC-CSS2/ if you prefer to get your information straight from the source. The above URL is for the official specs on CSS2.

Personally, I'd recommend starting with the Eric Meyer book to develop your understanding of CSS, then moving on to the actual specs.

By John Buchanan

Sunday, August 29, 2010

Google's Realtime Search Results Homepage

Last Friday, I learned that Google Realtime Search finally has its own homepage, where Google has added new tools like the "conversation view".

Google Alerts for updates from Twitter, Facebook and MySpace were also added. You can limit your search results by location to find out what people think about a subject in a certain place, and use geographic data to look for updates and news near you and in the surrounding area. So if you travel to the UAE, you can view tweets from Dubai to get ideas for activities taking place right where you are.

Try it yourself here: http://www.google.com/realtime

Saturday, August 21, 2010

Google's 1st Page Results Dominated by One Domain

Google said:

Today we’ve launched a change to our ranking algorithm that will make it much easier for users to find a large number of results from a single site. For queries that indicate a strong user interest in a particular domain...

Here is an example:

If you search for the term "apple", you will notice that almost all of the results now come from the Apple website: where Google usually showed only one or two results from a single site, you will now see more than five.

Tuesday, August 17, 2010

Using Capital Letters in URL's

Whether capital letters matter depends on the operating system installed on your server: Linux is case sensitive, while Windows is not. Suppose you have decided to promote the version with a capital letter (domainname.com/Your-Page) because you think it looks better and is easier to remember. On a Linux server, domainname.com/your-page may then simply not exist, while on a Windows server both versions return the same page, so search engines can see it under two different addresses.

So how can we solve these problems? Here are my solutions and recommendations for avoiding penalties:

- Most people recommend keeping a single version of each URL. I recommend always sticking with the lowercase version.

- If the URL with capital letters has already been indexed by Google or other search engines (for example, because someone linked to it, or because a CMS change altered your URLs), use 301 redirects to send users and search robots to the right place.
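If your site runs on a Python web stack, the 301 rule above can be sketched as WSGI middleware. This is a minimal illustration, not a drop-in fix: it assumes every legitimate URL on the site is lowercase (so any path containing capitals can safely be lowercased), and the names lowercase_redirect and site are hypothetical. On Apache or IIS you would normally configure the equivalent rewrite in the server itself.

```python
def lowercase_redirect(app):
    """WSGI middleware: 301-redirect any path containing capital letters to its lowercase form.

    Assumes every legitimate URL on the site is lowercase.
    """

    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "")
        if path != path.lower():
            # Keep the mount point and query string as they are; only the path is lowercased.
            location = environ.get("SCRIPT_NAME", "") + path.lower()
            query = environ.get("QUERY_STRING", "")
            if query:
                location += "?" + query
            start_response("301 Moved Permanently",
                           [("Location", location), ("Content-Length", "0")])
            return [b""]
        return app(environ, start_response)

    return middleware


# Hypothetical site application, wrapped by the middleware.
def site(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Your page"]


application = lowercase_redirect(site)
```

To try it locally, the standard library's wsgiref.simple_server.make_server("", 8000, application).serve_forever() will serve it; a request for /Your-Page should then come back as a 301 pointing to /your-page.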

Choosing Your Keywords

- Research has found that 31% of people enter two-word phrases into search engines, 25% look for three-word combinations, and only about 19% try their luck with a single word.

- Do not choose a keyword to optimize your site for if you have no realistic chance of ranking well for it because of fierce competition.

- Do not choose a keyword that nobody looks for.

- Do not choose a keyword that does not relate strongly enough to your content.

- Only use generally popular keywords if you do not need targeted traffic.

- Do not use words that may get your site filtered or banned from search engines.

- Do not use image filenames or ALT tags (alt attributes of the IMG tag) that may get your site filtered or banned from search engines.

- Only use dynamic pages when the functionality demands it.

- Use lots of relevant content, well laid out into separate pages.

- For best results optimize one page for one keyword.

- Do not post half-finished sites.

Thursday, July 22, 2010

How Long Will It Take For Rankings?

Niche Industry

A niche industry is represented by phrases that are relatively specific, such as "widgets Bellingham". While they don't require a geographic modifier, phrases focused on a very specific area are often considered niche. Phrases used for a niche site will also often return fewer than 100,000 results in a Google search, and the top 10 ranking sites will often have fewer than 100 inbound links each.

Timeline:

- Brand New Site: Possibly as little as a few months

- Established Site: Potentially it could literally be overnight, but most likely around 6 weeks.

Medium Industry

Medium industry terms are slightly more general, but still include some kind of modifier, such as a state or color: "Washington Widgets" or "Blue Widgets". These phrases often return no more than a few million results in a typical Google search, with the top 10 ranking sites having between 100 and 1,000 inbound links.

Timeline:

- Brand New Site: 6 months to a year

- Established Site: 2-4 months

Broad Industry

These pages target phrases that are rather broad and seldom have any modifiers, such as simply "widgets". You will often find tens or even hundreds of millions of competing pages in Google for your target phrase. Often the links required for the top 10 will be in the thousands or tens of thousands (sometimes even in the millions).

Timeline:

- Brand New Site: Anywhere from 1 to 5 years

- Established Site: Could be as long as a year or more

For more established sites, rankings tend to come much more quickly. One significant factor in determining time is links. If your established site has lots of links but is simply lacking fundamental SEO or proper navigation, you can sometimes see results rather quickly. If you have no links and need to build them, the wait time increases significantly. Even for an established site, earning links in a competitive industry can still take some time.
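The rules of thumb above can be encoded as a rough lookup. This Python sketch only restates the article's own ballpark figures; the cut-off used for "a few million" results (5,000,000 here) is an assumption, and the names TIMELINES, competition_tier and estimated_timeline are hypothetical. Real competition depends on far more than two numbers.

```python
# Ballpark timelines from the article: (brand-new site, established site).
TIMELINES = {
    "niche": ("possibly a few months", "overnight to about 6 weeks"),
    "medium": ("6 months to a year", "2-4 months"),
    "broad": ("1 to 5 years", "a year or more"),
}


def competition_tier(result_count: int, top10_inbound_links: int) -> str:
    """Classify a phrase by Google result count and typical inbound links of the top 10 sites."""
    if result_count < 100_000 and top10_inbound_links < 100:
        return "niche"
    # "A few million" results and 100-1,000 links; 5,000,000 is an assumed cut-off.
    if result_count <= 5_000_000 and top10_inbound_links <= 1_000:
        return "medium"
    return "broad"


def estimated_timeline(result_count: int, top10_inbound_links: int, established: bool) -> str:
    new_site, old_site = TIMELINES[competition_tier(result_count, top10_inbound_links)]
    return old_site if established else new_site


print(competition_tier(40_000, 25))                          # niche
print(estimated_timeline(2_000_000, 600, established=True))  # 2-4 months
```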

Rankings Are Taking Forever

There are a number of reasons why your site may not achieve results. If you find that your campaign has been going on for a long time and you have seen no movement whatsoever, it is possible that one of the following is hindering your efforts.

Spamming & Penalties

In some cases your site may take forever to achieve rankings, or the rankings may not come at all. If your site has been previously penalized for spamming, you absolutely must clean up all traces of the past dirtiness. Once the site is entirely cleaned up, you can apply for re-inclusion. This is certainly no assurance that Google will ever pay your site any attention again, but it's the first step to the land of maybe.

Duplicate Content

If your site has used large amounts of duplicate content, chances are you will never see rankings until you replace it all with something original and meaningful. There is no "duplicate content" penalty per se, but you are essentially penalizing yourself if you copy content. Google tends to treat the first instance of a piece of content it finds online as the official source (though not always). If you copy content that is already out there and indexed by Google, Google will discount your copy because it is already indexed somewhere else, and your site or page will simply not rank for it, and rightfully so.
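Google does not publish how it detects duplicate content, so the following is only a simple self-check you could run on your own pages: a Python sketch that normalizes each page's text (lowercase, punctuation and extra whitespace removed), hashes it, and groups pages whose fingerprints match. It only catches exact copies after normalization; spotting near-duplicates needs techniques such as shingling, which this does not attempt. The URLs and page text are made-up examples.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Hash of the text after lowercasing and ignoring punctuation/whitespace differences."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict) -> dict:
    """Map the first URL seen for each fingerprint to the later URLs that duplicate it."""
    groups = {}
    for url, text in pages.items():
        groups.setdefault(fingerprint(text), []).append(url)
    return {urls[0]: urls[1:] for urls in groups.values() if len(urls) > 1}


pages = {
    "/blue-widgets": "Blue widgets, on sale now!",
    "/widgets/blue": "blue widgets on sale now",
    "/red-widgets": "Red widgets, hand made in Bellingham.",
}
print(find_duplicates(pages))  # {'/blue-widgets': ['/widgets/blue']}
```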

Links

If your site has no links, you probably will not get any rankings, even after you are fully indexed. This is not always the case (I have seen sites rank well for various phrases with zero inbound links), but it is rare and should not be relied on. On the flip side, let's say your site has thousands of links, but they are from free-for-all sites, link farms, "bad neighborhoods" and so on: they won't help you. These links won't necessarily hurt you, but they will essentially be ignored. You need quality, relevant links.

Competition

You just may be out of your league. If you have a small operation and are competing for a major, ultra-competitive term, chances are you won't ever see the light of day. That's not to say it is impossible, but if you are competing in a well-established industry where literally tens of thousands of links are required, and your target phrase gets millions of searches a month, you need to weigh your targets. Chances are your keywords need to be re-evaluated, as your chances of success are slim.

Wednesday, March 24, 2010

White Hat SEO Fundamentals

1. Keyword Selection

2. Three Phrases Per Page

3. Page Title Tag

4. Page Meta Keywords field

5. Page Meta Description

6. Web URL

7. H1,H2,H3 tags

8. Bold, Strong call-out

9. Bullet Points

10. Navigation Link Titles For Main Pages

11. Navigation Link Titles for Secondary Pages

12. Hyperlink (Nofollow)

13. Plain Text in content

14. Image Alternate Attributes

15. Image Caption

16. Charts

17. PDF Documents

18. Cross Page Linking

19. Footer Text

20. Out-bound links

21. robots.txt file

22. Sitemap.xml file creation

23. Search Engine and Directory Submission

24. In-Bound Links

__________________________________________

1. KEYWORD SELECTION

Before I begin any SEO initiative, I do extensive research to determine the best keyword phrases for a particular site. Because keyword selection is the single most important aspect of the optimization process, and so complex that it requires serious understanding, I have recently devoted an entire advanced keyword selection lesson blog article to it.

2. Three Phrases Per Page

While a given page can get found for many longer phrases, or for more phrases than your top three, that requires having your site powerfully optimized and having many links back to your site.

At the same time, size and repetition constraints for various aspects below mean that you can typically only get away with three or perhaps four keyword phrases that you focus on for any single page. Trying to optimize for more phrases is more often than not a losing battle – much better to focus on high quality and let the natural process give you bonuses of additional phrase recognition.

_____________________________________________________

PAGE HEADERS:

Page headers are not part of the main content; however, they set the stage for how a web page is processed. For search engines, everything starts with page headers being properly seeded.

_____________________________________________________

3. Page Title Tag

This is the single most important and vital aspect of a web page for Google – all other attributes below are compared first against the page title. If the page title is not properly seeded, Google is essentially hindered from knowing exactly what the page is about.

<title>Company Name | Keyword Phrase | Keyword Phrase Keyword Phrase</title>

Title length: 60 to 105 characters including spaces. Google only displays the first 60 to 65 characters to people doing a search, depending on where the words cut off, so the most important keyword phrases should be up front.

Never repeat any single keyword more than three times. With creativity you can often combine keyword phrases in the page title, but only if you do a lot of on-page work to bolster it; otherwise, list the phrases in sequential order.
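The title rules above (company name and phrases separated by pipes, most important phrase first, roughly the first 60 characters visible in results, no keyword more than three times) are easy to check mechanically. A minimal Python sketch; build_title and title_warnings are hypothetical helpers, and the 60-character and three-repeat limits are simply the figures given in this section.

```python
import re
from collections import Counter


def build_title(company: str, phrases: list) -> str:
    """Join the company name and keyword phrases with pipes, in priority order."""
    return " | ".join([company, *phrases])


def title_warnings(title: str, visible_chars: int = 60, max_repeats: int = 3) -> list:
    warnings = []
    if len(title) > visible_chars:
        # Not an error, but anything past this point may be cut off in search results.
        warnings.append(f"only the first ~{visible_chars} of {len(title)} characters may be shown")
    words = Counter(re.findall(r"[a-z0-9']+", title.lower()))
    for word, count in words.items():
        if count > max_repeats:
            warnings.append(f"'{word}' appears {count} times")
    return warnings


title = build_title("Mary's Boutique", ["Designer Handbags", "Gucci Handbags"])
print(title)                  # Mary's Boutique | Designer Handbags | Gucci Handbags
print(title_warnings(title))  # []
```

Stuffing the same word into every segment ("Handbags | Cheap Handbags | Designer Handbags | Gucci Handbags") trips both checks: it runs past 60 characters and repeats "handbags" four times.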

_____________________________________________________

4. Page Meta Keywords field

This field is not used by Google at all – it is, however, used by Yahoo and MSN so it’s worth it to seed this field with your page’s keyword phrases.

<meta name="keywords" content="keyword phrase one,keyword phrase two,keyword phrase three">

Never repeat any single keyword more than three times.

_____________________________________________________

5. Page Meta Description

This field is not used to determine your page's ranking; it is, however, presented to people doing a search. But if Google thinks it doesn't really describe the page content, Google will ignore it and grab a snippet of text from your page, not always choosing anything that makes sense!

<meta name="description" content="Mary's boutique offers the highest quality designer handbags online from such designers as Gucci, Dior and more...">

Some search engines display only the first 150 characters, though others allow up to 200 characters including spaces.
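To see roughly how a description might look when a search engine cuts it off, you can trim it at the last whole word before the limit. This Python sketch assumes a simple character cut-off (150 by default, per the figure above); actual display varies by engine and over time, and preview_description is a hypothetical name.

```python
def preview_description(text: str, limit: int = 150) -> str:
    """Return text unchanged if it fits; else cut at the last space before `limit` and add '...'."""
    if len(text) <= limit:
        return text
    cut = text[:limit]
    if " " in cut:
        cut = cut.rsplit(" ", 1)[0]  # don't end mid-word
    return cut.rstrip(" ,;:") + "..."


description = ("Mary's boutique offers the highest quality designer handbags online "
               "from such designers as Gucci, Dior and more...")
print(preview_description(description))  # fits, so it is printed unchanged
print(preview_description("word " * 50))
```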

_____________________________________________________

6. Web URL

While the web URL is not critical, it is becoming more important as more web sites come online and more content becomes indexed. It used to be that you could get away with URLs that could be interpreted by programming scripts but didn’t make sense to site visitors. While you can still do that, having a well formatted URL that incorporates a limited amount of keyword seeding ensures one more match to the page title’s phrases.

So for example a well formatted URL might look like:

www.PrimaryKeyword.com/2ndKeyword/3rdKeyword/

So if you sell designer handbags, and one of those designers is Alexis Hudson, then the URL might be:

http://www.stefanibags.com/alexis-hudson-handbags

Or if you are a law firm specializing in mesothelioma, whose primary cause is asbestos exposure, you might have a page on your site with the URL:

http://www.mesothelioma-attorney.com/mesothelioma/asbestos-exposure
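Keyword-bearing paths like the ones above are usually generated from a page name. Here is a minimal Python sketch of such a "slug" function; it assumes you want lowercase, hyphen-separated ASCII words (lowercase also avoids case-sensitivity problems on Linux servers). The name slugify is a common convention for this kind of helper, not a particular library's API.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase ASCII words joined by hyphens, e.g. for /alexis-hudson-handbags."""
    # Split accented letters into base letter + accent, then drop the accent.
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words)


print("http://www.stefanibags.com/" + slugify("Alexis Hudson Handbags"))
# http://www.stefanibags.com/alexis-hudson-handbags
print("/mesothelioma/" + slugify("Asbestos Exposure"))
# /mesothelioma/asbestos-exposure
```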

_____________________________________________________

ON PAGE CONTENT:

This is a process that requires finesse, because whatever you do here must be weighed against maintaining quality content and readability.

_____________________________________________________

7. H1,H2,H3 tags

<h1>Most Important</h1>

<h2>Second Most Important</h2>

<h3>Third Most Important</h3>

Having text on your page wrapped in header tags like H1 where that text includes the page’s keyword phrases tells Google – this page’s emphasis really is about these phrases… It’s best to do this in an opening sentence or paragraph at the very top of the page but if you do your other seeding work well, the H1 or H2 or H3 text can be anywhere in the content area and still be of value.

_____________________________________________________

8. Bold, Strong call-out

<b>Keyword</b>

<strong>Keyword</strong>

Having sections of content highlighted by a few keyword seeded words in bold above each section helps the visitor visually see breaks in the content and quickly scan what they want to read. Using Bold or Strong emphasis tells Google “important text”…

You can also use bold, strong, and italics on keywords within the paragraphs as well.

_____________________________________________________

9. Bullet Points

<ul>
<li>Text with keyword or phrase</li>
<li>Text with 2nd keyword or phrase</li>
<li>Text with 3rd keyword or phrase</li>
</ul>

_____________________________________________________

10. Navigation Link Titles For Main Pages

Hyperlinks can have a title attribute, yet another opportunity to seed.

<a href="http://www.example.com/" title="Keyword Phrase">Keywords in Anchor Text</a>

_____________________________________________________

11. Navigation Link Titles for Secondary Pages

Same syntax as main pages, but slightly less weight is given to them.

_____________________________________________________

12. Hyperlink (Nofollow: tells search engines not to follow the link)

Sometimes you don’t want Google to follow a link in your site – perhaps you don’t want a page or section of your site indexed at Google at all, or perhaps you point to sites that Google frowns upon and you don’t want Google to penalize you for those.

Or perhaps you have a blog and visitors can leave comments – if they do, they may leave links in their comments back to their sites or to 3rd party sites – this is a very common way people try to spam Google. If this is something you want to avoid, you may consider using the “nofollow” attribute:

<a href="http://www.example.com/" rel="nofollow">Anchor Text</a>

_____________________________________________________

13. Plain Text in content

Needs to be embedded in natural sounding paragraphs – write your content to speak to your site visitor. (If someone reading your pages is not motivated to contact you or buy your products, then all the SEO in the world is useless!)

While you are doing this, include some entries that have an entire keyword phrase in sequence and other entries that have individual keywords split out of phrases. Having too many instances of keywords both makes the content unintelligible and it’s a red flag to the search engines… So this is a balancing act.
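One way to keep an eye on that balance is to measure how much of a page's text is taken up by a given phrase. This Python sketch computes a simple "keyword density" (words belonging to exact, in-order phrase matches divided by total words). The article gives no target number, so the code deliberately sets no threshold, and keyword_density is a hypothetical helper.

```python
import re


def _words(text: str) -> list:
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text: str, phrase: str) -> float:
    """Share of words in `text` that belong to exact, in-order matches of `phrase`."""
    words, target = _words(text), _words(phrase)
    if not words or not target:
        return 0.0
    n = len(target)
    hits, i = 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == target:
            hits += 1
            i += n  # skip past the match so overlapping matches are not double-counted
        else:
            i += 1
    return hits * n / len(words)


copy = "Blue widgets are our specialty. We ship blue widgets daily."
print(f"{keyword_density(copy, 'blue widgets'):.0%}")  # 40%
print(f"{keyword_density(copy, 'widgets'):.0%}")       # 20%
```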

_____________________________________________________

14. Image Alternate Attributes

(having images on the page adds weight because Google sees this as richer content than plain text).

(For pages created in HTML)

<img src="keyword.jpg" alt="Photo about keyword phrase">

(For pages created in XHTML)

<img src="keyword.jpg" alt="About keyword phrase" />

NOTE: Best practices require that the alt attribute of every image be filled out with information that helps visually impaired people know what the image is for, regardless of whether you also use these fields to add keywords.
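A quick way to audit that note across a page is to list every img element whose alt attribute is missing or blank. Python sketch using the standard library's html.parser; images_missing_alt is a hypothetical name. One caveat: accessibility guidelines do allow an intentionally empty alt="" on purely decorative images, so treat blank entries as items to review rather than automatic errors.

```python
from html.parser import HTMLParser


class _AltAuditor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.flagged = []

    # html.parser also routes self-closing <img ... /> tags through this method.
    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.flagged.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list:
    auditor = _AltAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.flagged


page = ('<img src="keyword.jpg" alt="About keyword phrase">'
        '<img src="spacer.gif">'
        '<img src="logo.png" alt=" " />')
print(images_missing_alt(page))  # ['spacer.gif', 'logo.png']
```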

_____________________________________________________

15. Image Caption

Just below the image you should have a short caption that includes at least part of the keyword phrase most relevant to the photo.

_____________________________________________________

16. Charts

If you can legitimately have a statistical chart related to a page’s content, do so – it can be an image or a spreadsheet but if it’s an image, use the same file name, alt attribute and caption rules – if it’s a spreadsheet in HTML, you may want to embed keywords as column or row names if that makes sense, or have a caption…

_____________________________________________________

17. PDF Documents

Having a PDF document related to the page’s keyword focus linked from within the page is also a good move, but it has to be an actual PDF with copy-capable text, not a screen capture of something that was saved as a PDF, because Google has to be able to read it. The more high-quality content that’s properly seeded with keywords, the better.

_____________________________________________________

18. Cross Page Linking

Having a paragraph in one page’s content that can make reference to the content from another page and having a keyword phrase in that paragraph link to that other page – this tells Google that important related and supporting information can be found “over there”.

_____________________________________________________

19. Footer Text

As the very last content within the page, or down in the footer area, have your page title repeated exactly as it is in the title tag. Ideally this will be H1, 2 or 3, or at the very least “strong” or “bold”.

_____________________________________________________

20. Out-bound links

Within your content, just as you have links pointing to other pages on your site, your most important pages should have links pointing out to 3rd party web sites that relate to that paragraph’s or page’s keyword phrases. The sites you point to should themselves be highly authoritative and highly positioned at Google for that phrase.

__________________________________________

21. ROBOTS.TXT FILE

Okay, so like every other “rule” of SEO, this one isn’t really “100% mission critical” to getting your site indexed. I include it among the top tasks, however, because a properly designed, formatted and supported web site will generally get better results, for a whole host of reasons, than one that is not.

So this one is about what you want the search engines to index and what you don’t. If you don’t have this file, something you might think is hidden from public consumption can turn out not to be.

So each site needs a plain text file named robots.txt in its root directory that tells search engine “bots” (the automated site scanning software that scours the web to gather information for the search engines) which pages or directories on the site are off limits. As a standard rule, we do not want search engines indexing anything inside a site’s design or development assets directories, or any test or “private” pages.

Example robots.txt file:

User-agent: *
Disallow: /design
Disallow: /visitor-logs
Disallow: /about/myprivatepage.html
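You can check which URLs a robots.txt file blocks with Python's standard urllib.robotparser module before uploading it. The sketch below feeds it the example above, with www.example.com standing in for your domain; load_rules is a hypothetical helper name. It makes two things visible. Rules are prefix matches, so "Disallow: /design" also blocks a page like /designer-handbags (use "/design/" if you only mean the directory). And Python's parser treats a blank line as the end of a group, so keeping each group's lines together is the safe format. Keep in mind that robots.txt is only a request to well-behaved crawlers and is itself publicly readable, so it should not be the only thing protecting private pages.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /design
Disallow: /visitor-logs
Disallow: /about/myprivatepage.html
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record a fetch time: can_fetch() answers False for everything until one is set
    return parser


rules = load_rules(ROBOTS_TXT)
for path in ["/design/header.psd", "/about/myprivatepage.html", "/about/", "/designer-handbags"]:
    allowed = rules.can_fetch("*", "http://www.example.com" + path)
    print(f"{path:30} {'allowed' if allowed else 'blocked'}")
```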

__________________________________________

22. SITEMAP.XML FILE CREATION

Every site is better served by having an XML-based file called sitemap.xml placed at the root directory level.

Unlike traditional HTML-based site maps, this is a specially formatted file that, once created, is submitted through the Google webmaster program, Yahoo Site Explorer, and also through the MSN Live system. This file tells the search engines how to better index the site’s pages. This work is presently handled by our in-house SEO engineer.

If you cannot generate an XML version of this file, a plain text version is acceptable; however, the plain text version carries less power when telling the search engines about your pages.

Some people will tell you it’s not necessary to have a sitemap.xml file. My own experience has shown that when I have one on a new site, more of that site’s pages are indexed sooner. When I have one on a site that changes regularly or where pages are added regularly, by going to Google’s Webmaster Tools page and Yahoo’s Site Explorer page, I can instantly tell them to re-index the sitemap file and I see faster results in those new pages being indexed. At the same time though, if you’ve got your site indexed, new pages and more pages will be indexed eventually.
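For reference, a sitemap.xml file follows the sitemaps.org protocol: a urlset element in the http://www.sitemaps.org/schemas/sitemap/0.9 namespace containing one url entry per page, each with a required loc and optional fields such as lastmod. Here is a minimal Python sketch that builds one with the standard library; build_sitemap is a hypothetical name, and the URLs and date are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """pages: iterable of (absolute URL, last-modified date as YYYY-MM-DD or None)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    ("http://www.example.com/", "2010-03-24"),
    ("http://www.example.com/alexis-hudson-handbags", None),
])
print(sitemap)  # save this output as sitemap.xml in the site's root directory
```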

__________________________________________

23. SEARCH ENGINE & DIRECTORY SUBMISSION

Once everything above is addressed, we need to submit the site to all the top search engines and directories. After you’ve submitted the site’s sitemap.xml file as described above, it’s very good practice to then submit the site to as many other quality directories as possible. Not only does this help because of the number of people who search at those locations, it also helps with the next step below: getting links back to your site.

The trick is that, beyond the standard places every site should be submitted to (the Yahoo Directory and the Open Directory Project (DMOZ), for example), you should also submit the site to any web directory that either specializes in your industry (such as a Trade Association’s business directory or a Chamber of Commerce membership directory) or lists web sites for a particular region (such as a county or town specific business listing directory), if some or all of your customers are local and you have a physical store or office.

Some people will tell you that search spiders are so good that you don’t even need to submit your site – that it will be picked up anyhow. Well, my opinion is that I don’t want to wait around and hope that’s going to happen. I want to be proactive.

__________________________________________

24. LINKS BACK

One of the most important aspects of a page’s ranking value at the search engines is now “how many sites link back to our site?” It’s all good and fine to create a pleasing site that is of value to site visitors, yet if no other sites on the Internet link back to it, the top search engines consider the site of little importance. As such, once all of the above work is done, submitting the site to as many other web sites as possible for inclusion in their “links” or “resources” section is vital.

The more web sites that provide a link back, the better; however, the ideal goal is to have as many of THOSE links as possible come from web sites that are established, popular and/or authoritative in nature. For example, a link back from www.cnn.com is given more value than a link back from www.petuniajonesjuniorhighschoolgigglespage.com.

This process is still a challenge because many sites today are designed specifically to attract high rankings in the search engines so they can then sell link listings. Increasingly, Google considers this a bad thing. It sees sites listed at such places as potentially attempting to gain ranking artificially, by mere association. So Google now “frowns” upon paid text links.

LINKS TO AVOID

A word needs to be said about obtaining links from web sites that are, or might become, red-flagged by Google. This can include sites whose sole or primary purpose is to charge web site owners for paid inclusion but which are filled with very low quality links or countless sponsored links that are clearly spam (keywords repeated in text links over and over again without any sense of variety can be an obvious sign, like “online pharmacy discount drugs”).

Also – what is the policy of the site? Do they have “quality submission standards”? Is there anything on the site that just looks like it’s a shady site? Sometimes this is a judgement call. My personal policy is that if you have to pay for submission, and if all they do is claim to be a link directory, to avoid the site altogether. Read more about link building at my blog post Five Link Building Strategies for SEO.

by: Alan Bleiweiss

Tuesday, March 16, 2010

Interview with Matt Cutts by Eric Enge

Interview Transcript

Eric Enge: Let's talk a little bit about the concept of crawl budget. My understanding has been that Googlebot would come to a website knowing how many pages it was going to take that day, and then it would leave once it was done with those pages.

Matt Cutts: I'll try to talk through some of the different things to bear in mind. The first thing is that there isn't really such a thing as an indexation cap. A lot of people were thinking that a domain would only get a certain number of pages indexed, and that's not really the way that it works.

There is also not a hard limit on our crawl. The best way to think about it is that the number of pages that we crawl is roughly proportional to your PageRank. So if you have a lot of incoming links on your root page, we'll definitely crawl that. Then your root page may link to other pages, and those will get PageRank and we'll crawl those as well. As you get deeper and deeper in your site, however, PageRank tends to decline.

Another way to think about it is that the low PageRank pages on your site are competing against a much larger pool of pages with the same or higher PageRank. There are a large number of pages on the web that have very little or close to zero PageRank. The pages that get linked to a lot tend to get discovered and crawled quite quickly. The lower PageRank pages are likely to be crawled not quite as often.
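To make the point about depth concrete, here is the textbook PageRank power iteration (damping factor 0.85, the value used in the original PageRank paper) run in Python on a hypothetical five-page site. It is the classic published formula, not a model of how Google's crawler actually schedules pages, and the page names are made up; it simply shows PageRank thinning out as you move away from the home page.

```python
def pagerank(links: dict, damping: float = 0.85, iterations: int = 50) -> dict:
    """Power iteration for PageRank. Assumes every page has at least one outgoing link
    and every link target is itself a key in `links`."""
    n = len(links)
    rank = {page: 1.0 / n for page in links}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in links}
        for page, outlinks in links.items():
            share = damping * rank[page] / len(outlinks)  # split this page's rank over its links
            for target in outlinks:
                new_rank[target] += share
        rank = new_rank
    return rank


site = {
    "home": ["products", "blog"],
    "products": ["home", "widget"],  # one level deep
    "blog": ["home", "post"],
    "widget": ["home"],              # two levels deep
    "post": ["home"],
}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:9} {score:.3f}")
```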

One thing that's interesting in terms of the notion of a crawl budget is that although there are no hard limits in the crawl itself, there is the concept of host load. The host load is essentially the maximum number of simultaneous connections that a particular web server can handle. Imagine you have a web server that can only have one bot at a time. This would only allow you to fetch one page at a time, and there would be a very, very low host load, whereas some sites like Facebook, or Twitter, might have a very high host load because they can take a lot of simultaneous connections.

Your site could be on a virtual host with a lot of other web sites on the same IP address. In theory, you can run into limits on how hard we will crawl your site. If we can only take two pages from a site at any given time, and we are only crawling over a certain period of time, that can then set some sort of upper bound on how many pages we are able to fetch from that host.

Eric Enge: So you have basically two factors. One is raw PageRank, which tentatively sets how much crawling is going to be done on your site. But host load can impact it as well.

Matt Cutts: That's correct. By far, the vast majority of sites are in the first realm, where PageRank plus other factors determine how deep we'll go within a site. It is possible that host load can impact a site as well, however. That leads into the topic of duplicate content. Imagine we crawl three pages from a site, and then we discover that two of those pages were duplicates of the third page. We'll drop two out of the three pages and keep only one, and that's why the site looks like it has less good content. So we might tend to not crawl quite as much from that site.

If you happen to be host load limited, and you are in the range where we have a finite number of pages that we can fetch because of your web server, then the fact that you had duplicate content and we discarded those pages meant you missed an opportunity to have other pages with good, unique quality content show up in the index.
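The host-load ceiling and the cost of duplicates can be put into rough numbers. The sketch below assumes a host that allows two simultaneous connections, a one-hour crawl window and two seconds per fetch; all of these figures are hypothetical, and real crawl scheduling is far more adaptive than a fixed formula.

```python
def max_fetches(simultaneous_connections, window_seconds, seconds_per_fetch):
    """Upper bound on pages fetched from one host during a crawl window."""
    fetches_per_connection = int(window_seconds // seconds_per_fetch)
    return simultaneous_connections * fetches_per_connection


def distinct_pages(fetch_budget, urls_per_page):
    """Distinct pages covered when every page is reachable under
    urls_per_page duplicate URLs and the crawler fetches each copy."""
    return fetch_budget // urls_per_page


if __name__ == "__main__":
    budget = max_fetches(simultaneous_connections=2, window_seconds=3600, seconds_per_fetch=2.0)
    print(budget)                     # upper bound for the window
    print(distinct_pages(budget, 3))  # same budget spent on pages with three URLs each
```

Under these assumptions the host can serve at most 3,600 fetches in the hour, and if every page exists under three URLs, only 1,200 distinct pages are covered.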

Eric Enge: That's always been classic advice that we've given people, that one of the costs of duplicate content is wasted crawl budget.

Matt Cutts: Yes. One idea is that if you have a certain amount of PageRank, we are only willing to crawl so much from that site. But some of those pages might get discarded, which would sort of be a waste. It can also be in the host load realm, where we are unable to fetch so many pages.

Eric Enge: Another background concept we talk about is the notion of wasted link juice. I am going to use the term PageRank, but more generically I really mean link juice, which might relate to concepts like trust and authority beyond the original PageRank concept. When you link from one page to a duplicate page, you are squandering some of your PageRank, correct?

Matt Cutts: It can work out that way. Typically, duplicate content is not the largest factor in how many pages will be crawled, but it can be a factor. My overall advice is that it helps enormously if you can fix the site architecture up front, because then you don't have to worry as much about duplicate content issues and all the corresponding things that come along with it. You can often use 301 Redirects for duplicate URLs to merge those together into one single URL. If you are not able to do a 301 Redirect, then you can fall back on rel=canonical.

Some people can't get access to their web server to generate a 301; maybe they are on a school account, a free host, or something like that. But if they are able to fix it in the site architecture, that's preferable to patching it up afterwards with a 301 or a rel=canonical.

Eric Enge: Right, that's definitely the gold standard. Let’s say you have a page that has ten other pages it links to. If three of those pages are actually duplicates which get discarded, have you then wasted three of your votes?

(on duplicate content:) "What we try to do is merge pages, rather than dropping them completely"

Matt Cutts: Well, not necessarily. That's the sort of thing where people can run experiments. What we try to do is merge pages, rather than dropping them completely. If you link to three pages that are duplicates, a search engine might be able to realize that those three pages are duplicates and transfer the incoming link juice to those merged pages.

It doesn't necessarily have to be the case that PageRank is completely wasted. It depends on the search engine and the implementation. Given that every search engine might implement things differently, if you can do it on your site where all your links go to a single page, then that’s definitely preferable.

Eric Enge: Can you talk a little bit about Session IDs?

Matt Cutts: Don't use them. In this day and age, most people should have a pretty good idea of ways to make a site that don't require Session IDs. At this point, most software makers should be thinking about that, not just from a search engine point of view, but from a usability point of view as well. Users are more likely to click on links that look prettier, and they are more likely to remember URLs that look prettier.

If you can't avoid them, however, Google does now offer a tool to deal with Session IDs. People now have the ability, which they’ve had in Yahoo! for a while, to say that a URL parameter should be ignored because it is useless or doesn't add value, so that the URL can be rewritten to the prettier version. Google does offer that option, and it's nice that we do. Some other search engines do as well, but if you can get by without Session IDs, that's typically the best.
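The parameter-ignoring setting lives on the search engine side, but the same normalization can be applied on your own site, for example when generating internal links. A minimal sketch using Python's standard urllib.parse; the session parameter names are hypothetical and would need to match whatever your own software uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical names for parameters that never change the page content.
IGNORED_PARAMS = {"sid", "sessionid", "phpsessid"}


def strip_ignored_params(url, ignored=IGNORED_PARAMS):
    """Return the URL with session-style parameters removed, keeping the rest in order."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name.lower() not in ignored
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))
```

For example, https://www.example.com/shoes?sid=abc123&color=red becomes https://www.example.com/shoes?color=red, and a URL whose only parameter is a session ID loses its query string entirely.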

Eric Enge: Ultimately, there is a risk that it ends up being seen as duplicate content.

Matt Cutts: Yes, exactly, and search engines handle that well most of the time. The common cases are typically not a problem, but I have seen cases where multiple versions of pages would get indexed with different Session IDs. It’s always best to take care of it on your own site so you don't have to worry about how the search engines take care of it.

Eric Enge: Let's touch on affiliate programs. You are getting other people to send you traffic, and they put a parameter on the URL. You maintain the parameter throughout the whole visit to your site, which is fairly common. Is that something that search engines are pretty good at processing, or is there a real risk of perceived duplicate content there?

Matt Cutts: Duplicate content can happen. If you are operating something like a co-brand, where the only difference in the pages is a logo, then that's the sort of thing that users look at as essentially the same page. Search engines are typically pretty good about trying to merge those sorts of things together, but other scenarios certainly can cause duplicate content issues.

Eric Enge: There is some classic SEO advice out there, which says that what you really should do is let them put their parameter on their URL, but when users click on that link to get to your site, you 301 redirect them to the page without that parameter, and drop the parameter in a cookie.

Matt Cutts: People can do that. The same sort of thing can also work for landing pages for ads, for example. You might consider putting your affiliate or ad landing pages in a separate URL directory, which you could then block in robots.txt. Much like ads, affiliate links are typically intended for actual users, not for search engines. That way it’s very traceable, and you don't have to worry about affiliate codes getting leaked or causing duplicate content issues if those pages never get crawled in the first place.
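Both approaches discussed here can be sketched with Python's standard library. The first function answers a request that carries an affiliate parameter with a 301 to the clean URL and moves the ID into a cookie; the second part checks a robots.txt that blocks a separate affiliate landing-page directory. The parameter name aff, the /aff/ directory and the 30-day cookie lifetime are all hypothetical choices.

```python
from http.cookies import SimpleCookie
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

AFFILIATE_PARAM = "aff"  # hypothetical affiliate parameter name


def affiliate_redirect(url):
    """Return (status, headers) for an incoming request URL.

    If the affiliate parameter is present, answer with a 301 to the same URL
    without it and keep the affiliate ID in a cookie instead.
    """
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    affiliate_ids = [value for name, value in params if name == AFFILIATE_PARAM]
    if not affiliate_ids:
        return 200, {}  # no affiliate code: serve the page normally
    kept = [(name, value) for name, value in params if name != AFFILIATE_PARAM]
    clean_url = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))
    cookie = SimpleCookie()
    cookie[AFFILIATE_PARAM] = affiliate_ids[0]
    cookie[AFFILIATE_PARAM]["path"] = "/"
    cookie[AFFILIATE_PARAM]["max-age"] = 30 * 24 * 3600  # hypothetical 30-day window
    return 301, {"Location": clean_url, "Set-Cookie": cookie[AFFILIATE_PARAM].OutputString()}


# Alternative: keep affiliate landing pages under one directory and block it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /aff/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """Check a URL against the hypothetical robots.txt above."""
    return robots.can_fetch(user_agent, url)
```

The redirect keeps one clean URL per page for crawlers, while the directory approach keeps affiliate pages out of the crawl altogether; which fits better depends on whether the landing pages duplicate normal content.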

Eric Enge: If Googlebot sees an affiliate link out there, does it treat that link as an endorsement or an ad?

(on affiliate links:) "... we usually would not count those as an endorsement"

Matt Cutts: Typically, we want to handle those sorts of links appropriately. A lot of the time, that means that the link is essentially driving people for money, so we usually would not count those as an endorsement.

Eric Enge: Let's talk a bit about faceted navigation. For example, on Zappos, people can buy shoes by size, by color, by brand, and the same product is listed 20 different ways, so that can be very challenging. What are your thoughts on those kinds of scenarios?

Matt Cutts: Faceted navigation in general can be tricky. Some regular users don't always handle it well, and they get a little lost on where they are. There might be many ways to navigate through a piece of content, but you want each page of content to have a single URL if you can help it. There are many different ways to slice and dice data. If you can decide on your own what you think are the most important ways to get to a particular piece of content, then you can actually look at trying to have some sort of hierarchy in the URL parameters.

Category would be the first parameter, and price the second, for example. Even if someone is navigating via price, and then clicks on a category, you can make it so that that hierarchy of how you think things should be categorized is enforced in the URL parameters in terms of the position.

This way, the most important category is first, and the next most important is second. That sort of thing can help some search engines discover the content a little better, because they might be able to realize they will still get useful or similar content if they remove this last parameter. In general, faceted navigation is a tough problem, because you are creating a lot of different ways that someone can find a page. You might have a lot of intermediate paths before they get to the payload.
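One way to enforce this kind of parameter hierarchy is to rewrite every facet URL into a fixed parameter order before linking to it. A minimal sketch; the facet names and their priority (category, then price, then color) are hypothetical, and unknown parameters are simply appended in alphabetical order.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet priority: most important first.
FACET_ORDER = ["category", "price", "color"]


def ordered_facet_url(url, order=FACET_ORDER):
    """Rewrite a faceted URL so its parameters follow a fixed hierarchy.

    The same set of facets always yields the same URL, whichever order the
    user clicked them in, and dropping the last parameter leads to a
    broader listing. Repeated parameters collapse to their last value here.
    """
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    ranked = [(name, params[name]) for name in order if name in params]
    extras = sorted((name, value) for name, value in params.items() if name not in order)
    query = urlencode(ranked + extras)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))
```

A user who filters by color, then price, then category and one who filters in the opposite order both land on https://www.example.com/shoes?category=boots&price=50-100&color=black.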

If it's possible to keep things relatively shallow in terms of intermediate pages, that can be a good practice. If someone has to click through seven layers of faceted navigation to find a single product, they might lose their patience. It is also weird on the search engine side if we have to click through seven or eight layers of intermediate faceted navigation before we get to a product. In some sense, that's a lot of clicks, and a lot of PageRank that is used up on these intermediate pages with no specific products that people can buy. Each of those clicks is an opportunity for a small percentage of the PageRank to dissipate.

While faceted navigation can be good for some users, deciding on your own hierarchy for categorizing the pages and making sure that the faceted navigation is relatively shallow are both good practices to help search engines discover the actual products a little better.
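The point about PageRank dissipating at each click can be illustrated with the simplified random-surfer model from the original PageRank paper: each hop passes on at most the damping factor (0.85 there) of a page's score, split among its outlinks. This is a sketch under those assumptions only; it ignores the random-jump term and any other links into the product page.

```python
def share_after_path(outlinks_per_hop, damping=0.85):
    """Fraction of the starting page's PageRank that reaches the end of a
    click path, in a simplified model where each hop keeps damping / outlinks.

    outlinks_per_hop lists how many links each page on the path has.
    """
    share = 1.0
    for outlinks in outlinks_per_hop:
        share *= damping / outlinks
    return share


if __name__ == "__main__":
    # Two clicks versus seven clicks, pretending each page links only onward.
    print(f"{share_after_path([1, 1]):.3f}")
    print(f"{share_after_path([1] * 7):.3f}")
    # A more realistic facet page with 20 links per level.
    print(f"{share_after_path([20] * 3):.2e}")
```

Even in the generous one-link-per-page case, two hops keep about 72 percent of the starting share while seven hops keep about 32 percent; with many links per facet page the drop is far steeper.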

Eric Enge: If you have pages that have basically the same products, or substantially similar products with different sort orders, is that a good application for the canonical tag?

Matt Cutts: It can be, or you could imagine reordering the position of the parameters on your own. In general, the canonical tag is designed to allow you to tell search engines that two pages of content are essentially the same. You might not want to necessarily make a distinction between a black version of a product and a red version of a product if you have 11 different colors for that product. You might want to just have the one default product page, which would then be smart enough to have a dropdown or something like that. Showing minor variations within a product and having a rel=canonical on all of those is a fine way to use the rel=canonical tag.
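For the color-variant case, a page template could compute the canonical URL by dropping the variant parameters and emit the link element in the page head. A minimal sketch; the parameter names color and size and the example URLs are hypothetical. Applied to the default product page itself, the helper produces a self-referencing tag.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only select a minor variant of one product.
VARIANT_PARAMS = {"color", "size"}


def canonical_link_tag(page_url, variant_params=VARIANT_PARAMS):
    """Return a <link rel="canonical"> element pointing at the default product page."""
    parts = urlsplit(page_url)
    kept = [(name, value) for name, value in parse_qsl(parts.query) if name not in variant_params]
    # Fragments never reach the server, so the canonical URL drops them.
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'
```

Every color of product 123 then declares https://www.example.com/product/123 as its canonical page, while parameters that really do change the content are left in place.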

Eric Enge: Let’s talk a little bit about the impact on PageRank, crawling and indexing of some of the basic tools out there. Let’s start with our favorite 301 Redirects.

Matt Cutts: Typically, the 301 Redirect would pass PageRank. It can be a very useful tool to migrate between pages on a site, or even migrate between sites. Lots of people use it, and it seems to work relatively well, as its effects take hold pretty quickly. I used it myself when I tried going from dullest.com to mattcutts.com, and that transition went perfectly well. My own testing has shown that it's been pretty successful. In fact, if you do site:dullest.com right now, you don't get any pages. All the pages have migrated from dullest.com over to mattcutts.com. At least for me, the 301 does work the way that I would expect it to. All the pages of interest make it over to the new site if you are doing a page-by-page migration, so it can be a powerful tool in your arsenal.

Eric Enge: Let’s say you move from one domain to another and you write yourself a nice little statement that basically instructs the search engine and any user agent on how to remap from one domain to the other. In a scenario like this, is there some loss in PageRank that can take place simply because the user who originally implemented a link to the site didn't link to it on the new domain?

Matt Cutts: That's a good question, and I am not 100 percent sure about the answer. I can certainly see how there could be some loss of PageRank. I am not 100 percent sure whether the crawling and indexing team has implemented that sort of natural PageRank decay, so I will have to go and check on that specific case. (Note: in a follow-up email, Matt confirmed that this is in fact the case: there is some loss of PR through a 301.)

Eric Enge: Let’s briefly talk about 302 Redirects.

Matt Cutts: 302s are intended to be temporary. If you are only going to put something in place for a short amount of time, then 302s are perfectly appropriate to use. Typically, they wouldn't flow PageRank, but they can also be very useful. If a site is doing something for just a small amount of time, 302s can be a perfect fit for that sort of situation.

Eric Enge: How about server side redirects that return no HTTP Status Code or a 200 Status Code?

Matt Cutts: If we just see the 200, we would assume that the content that was returned was at the URL address that we asked for. If your web server is doing some strange rewriting on the server side, we wouldn't know about it. All we would know is that we requested the old URL, got some content, and indexed that content. We would index it under the original URL’s location.

Eric Enge: So it’s essentially like a 302?

Matt Cutts: No, not really. You are essentially fiddling with things on the web server to return a different page's content for a page that we asked for. As far as we are concerned, we saw a link, we followed that link to this page, and we asked for this page. You returned us content, and we indexed that content at that URL.

People can always do dynamic stuff on the server side. You could imagine a CMS implemented within the web server that didn't do 301s and 302s, but it would get pretty complex and it would be pretty error prone.

Eric Enge: Can you give a brief overview of the canonical tag?

Matt Cutts: There are a couple of things to remember here. If you can reduce your duplicate content using site architecture, that's preferable. The pages you combine don't have to be complete duplicates, but they really should be conceptual duplicates of the same product, or things that are closely related. People can now do cross-domain rel=canonical, which we announced last December.

For example, I could put up a rel=canonical for my old school account to point to my mattcutts.com. That can be a fine way to use rel=canonical if you can't get access to the web server to add redirects in any way. Most people, however, use it for duplicate content to make sure that the canonical version of a page gets indexed, rather than some other version of a page that you didn't want to get indexed.

Eric Enge: So if somebody links to a page that has a canonical tag on it, does it treat that essentially like a 301 to the canonical version of the page?

Matt Cutts: Yes, to call it a poor man's 301 is not a bad way to think about it. If your web server can do a 301 directly, you can just implement that, but if you don't have the ability to access the web server or it's too much trouble to set up a 301, then you can use a rel=canonical.

It’s totally fine for a page to link to itself with rel=canonical, and it's also totally fine, at least with Google, to have rel=canonical on every page on your site. People think it has to be used very sparingly, but that's not the case. We specifically asked ourselves about a situation where every page on the site has rel=canonical. As long as you take care that those tags point to the correct pages, that should be no problem at all.

Eric Enge: I think I've heard you say in the past that it’s a little strong to call a canonical tag a directive. You call it "a hint" essentially.

Matt Cutts: Yes. Typically, the crawl team wants to consider these things hints, and the vast majority of the time we'll take it on advisement and act on that. If you call it a directive, then you sort of feel an obligation to abide by that, but the crawling and indexing team wants to reserve the ultimate right to determine if the site owner is accidentally shooting themselves in the foot and not listen to the rel=canonical tag. The vast majority of the time, people should see the effects of the rel=canonical tag. If we can tell they probably didn't mean to do that, we may ignore it.

Eric Enge: The Webmaster Tools "ignore parameters" is effectively another way of doing the same thing as a canonical tag.

Matt Cutts: Yes, it's essentially like that. It’s nice because robots.txt can be a little bit blunt: if you block a page from being crawled and we don't fetch it, we can't see it as a duplicate version of another page. But if you tell us in the webmaster console which parameters on URLs are not needed, then we can benefit from that.

Eric Enge: Let's talk a bit about KML files. Is it appropriate to put these pages in robots.txt to save crawl budget?

"... if you are trying to block something out from robots.txt, often times we'll still see that URL and keep a reference to it in our index. So it doesn't necessarily save your crawl budget"

Matt Cutts: Typically, I wouldn't recommend that. The best advice coming from the crawler and indexing team right now is to let Google crawl the pages on a site that you care about, and we will try to de-duplicate them. You can try to fix that in advance with good site architecture or 301s, but if you are trying to block something out from robots.txt, often times we'll still see that URL and keep a reference to it in our index. So it doesn't necessarily save your crawl budget. It is kind of interesting because Google will try to crawl lots of different pages, even with non-HTML extensions, and in fact, Google will crawl KML files as well.

What we would typically recommend is to just go ahead and let the Googlebot crawl those pages and then de-duplicate them on our end. Or, if you have the ability, you can use site architecture to fix any duplication issues in advance. If your site is 50 percent KML files or you have a disproportionately large number of fonts and you really don't want any of them crawled, you can certainly use robots.txt. Robots.txt does allow a wildcard within individual directives, so you can block them. For most sites that are typically almost all HTML with just a few additional pages or different additional file types, I would recommend letting Googlebot crawl those.
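Robots.txt wildcards, as Google documents them, use * for any run of characters and a trailing $ to anchor the end of the URL. The sketch below implements only that pattern matching, to show how a rule such as Disallow: /*.kml$ would apply; it is a simplified helper, not a complete robots.txt evaluator (it ignores Allow rules and user-agent groups). It does not use Python's urllib.robotparser, which only does prefix matching on paths.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the match to
    the end of the path. Everything else is literal, and matching always
    starts at the beginning of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def is_disallowed(path, disallow_patterns):
    """True if any Disallow pattern matches the path (re.match anchors at the start)."""
    return any(robots_pattern_to_regex(p).match(path) for p in disallow_patterns)


# Hypothetical rule for a site that really is mostly KML files.
RULES = ["/*.kml$"]
```

With that rule, /maps/stores.kml is blocked while /maps/stores.html and /maps/stores.kml?v=2 are not, because the $ requires the path to end in .kml.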

Eric Enge: You would avoid the machinations involved if it's a small percentage of the actual pages.

Matt Cutts: Right.

Eric Enge: Does Google do HEAD requests to determine the content type?

Matt Cutts: For people who don't know, there are different ways to try to fetch and check on content. If you do a GET, then you are requesting for the web server to return that content. If you do a HEAD request, then you are asking the web server whether that content has changed or not. The web server can just respond more or less with a yes or no, and it doesn't actually have to send the content. At first glance, you might think that the HEAD request is a great way for web search engines to crawl the web and only fetch the pages that have changed since the last time they crawled.

It turns out, however, most web servers end up doing almost as much work to figure out whether a page has changed or not when you do a HEAD request. In our tests, we found it's actually more efficient to go ahead and do a GET almost all the time, rather than running a HEAD against a particular page. There are some things that we will run a HEAD for. For example, our image crawl may use HEAD requests because images might be much, much larger in content than web pages.

In terms of crawling the web and text content and HTML, we'll typically just use a GET and not run a HEAD query first. We still use things like If-Modified-Since, where the web server can tell us if the page has changed or not. There are still smart ways that you can crawl the web, but HEAD requests have not actually saved that much bandwidth in terms of crawling HTML content, although we do use it for image content.
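On the server side, honoring If-Modified-Since is a simple comparison: if the page has not changed since the date the crawler sends, answer 304 Not Modified with no body; otherwise send the full page with a 200. This is a minimal sketch of that standard HTTP rule using Python's email.utils date helpers, not Google's crawler code; how a page's last-modified time is tracked is up to your own CMS and is assumed here to be a timezone-aware UTC datetime.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def last_modified_header(last_modified):
    """Format a timezone-aware UTC datetime as an HTTP Last-Modified value."""
    return format_datetime(last_modified, usegmt=True)


def conditional_get_status(last_modified, if_modified_since=None):
    """Return 304 if the page is unchanged since the client's copy, else 200.

    last_modified must be a timezone-aware datetime.
    """
    if if_modified_since is None:
        return 200
    try:
        since = parsedate_to_datetime(if_modified_since)
    except (TypeError, ValueError):
        return 200  # unparseable date: fall back to sending the full page
    if since.tzinfo is None:
        since = since.replace(tzinfo=timezone.utc)
    # HTTP dates have one-second resolution, so drop sub-second precision.
    if last_modified.replace(microsecond=0) <= since:
        return 304
    return 200


if __name__ == "__main__":
    page_changed = datetime(2010, 3, 1, 12, 0, tzinfo=timezone.utc)
    header = last_modified_header(page_changed)
    print(header)
    print(conditional_get_status(page_changed, header))
```

The server sends Last-Modified with the full page; on the next visit the crawler echoes that date back, and an unchanged page costs only a short 304 response.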

Eric Enge: And presumably you could use that with video content as well, correct?

Matt Cutts: Right, but I'd have to check on that.

Eric Enge: To expand on the faceted navigation discussion, we have worked on a site that has a very complex faceted navigation scheme. It's actually a good user experience. They have seen excellent increases in conversion after implementing this on their site. It has resulted in much better revenue per visitor which is a good signal.

Matt Cutts: Absolutely.

Eric Enge: On the other hand, what they've seen is that the number of indexed pages has dropped significantly on the site. Presumably, it's because these various flavors of the pages are, for the most part, just listing products in different orders.

The pages are not text rich; there isn't a lot for the crawler to chew on, so they look like poor quality pages or duplicates. What's the best way for someone like that to deal with this? Should they prevent crawling of those pages?

Matt Cutts: In some sense, faceted navigation can almost look like a bit of a maze to search engines, because you can have so many different ways of slicing and dicing the data. If search engines can't get through the maze to the actual products on the other side, then sometimes that can be tricky in terms of the algorithm determining the value add of individual pages.

Going back to some of the earlier advice I gave, one thing to think about is that if you can limit the number of lenses or facets by which you can view the data, that can be a little bit helpful and sometimes reduce confusion. That’s something you can certainly look at. If there is a default category, hierarchy, or way that you think is the most efficient or most user-friendly to navigate through, it may be worth trying.

You could imagine trying rel=canonical on those faceted navigation pages to pull you back to the standard way of going down through faceted navigation. That's the sort of thing where you probably want to try it as an experiment to see how well it works. I could imagine that it could help unify a lot of those faceted pages down into one path to a lot of different products, but you would need to see how users would respond to that.

Eric Enge: So if Googlebot comes to a site and it sees 70 percent of the pages are being redirected or have rel=canonical to other pages, what happens? When you have a scenario like that, do you reduce the amount of time you spend crawling those pages because you've seen that tag there before?

Matt Cutts: It’s not so much that rel=canonical would affect that, but our algorithms are trying to crawl a site to ascertain the usefulness and value of those pages. If there are a large number of pages that we consider low value, then we might not crawl quite as many pages from that site, but that is independent of rel=canonical. That would happen with just the regular faceted navigation if all we see are links and more links.

It really is the sort of thing where individual sites might want to experiment with different approaches. I don't think that there is necessarily anything wrong with using rel=canonical to try to push the search engine towards a default path of navigating through the different facets or different categories. You are just trying to take this faceted experience, reduce the number of multiplied paths, and pull it back towards a more logical path structure.

Eric Enge: It does sound like there is a remaining downside here, that the crawler is going to spend a lot of its time on these pages that aren't intended for indexing.

Matt Cutts: Yes, that’s true. If you think about it, every level or every different way that you can slice and dice that data is another dimension in which the crawler can crawl an entire product catalogue over again, multiplying the number of pages, and those pages might not even have the actual product. You might still be navigating down through city, state, profession, color, price, or whatever. You really want to have most of your pages have actual products with lots of text on them. If your navigation is overly complex, there is less material for search engines to find and index and return in response to users' queries.

A lot of the time, faceted navigation can be like layers between the users or search engines and the actual products. It’s just layers and layers of multiplicative pages that don't really get you straight to the content. That can be difficult from a search engine or user perspective sometimes.

Eric Enge: What about PageRank Sculpting? Should publishers consider using encoded JavaScript redirects of links, or implementing links inside iframes?

Matt Cutts: My advice on that remains roughly the same as the advice on the original ideas of PageRank Sculpting. Even before we talked about how PageRank Sculpting was not the most efficient way to try to guide Googlebot around within a site, we said that PageRank Sculpting was not the best use of your time because that time could be better spent on getting more links to and creating better content on your site.

PageRank Sculpting is taking the PageRank that you already have and trying to guide it to different pages that you think will be more effective, and there are much better ways to do that. If you have a product that gives you great conversions and a fantastic profit margin, you can put that right at the root of your site front and center. A lot of PageRank will flow through that link to that particular product page.

Site architecture, how you make links and structure appear on a page in a way to get the most people to the products that you want them to see, is really a better way to approach it than trying to do individual sculpting of PageRank on links. If you can get your site architecture to focus PageRank on the most important pages or the pages that generate the best profit margins, that is a much better way of directly sculpting the PageRank than trying to use an iFrame or encoded JavaScript.

I feel like if you can get site architecture straight first, then you'll have less to do, or no need to even think about PageRank Sculpting. Just to go beyond that and be totally clear, people are welcome to do whatever they want on their own sites, but in my experience, PageRank Sculpting has not been the best use of peoples' time.

Eric Enge: I was just giving an example of a site with a faceted navigation problem, and as I mentioned, they were seeing a decline in the number of indexed pages. They just want to find a way to get Googlebot to not spend time on pages that they don't want getting in the index. What are your thoughts on this?

Matt Cutts: A good example might be to start with your ten best selling products, put those on the front page, and then on those product pages you could have links to your next ten or hundred best selling products. Each product could have ten links, and each of those ten links could point to ten other products that are selling relatively well. Think about sites like YouTube or Amazon; they do an amazing job of driving users to related pages and related products that they might want to buy anyway.

If you show up on one of those pages and you see something that looks really good, you click on that and then from there you see five more useful and related products. You are immediately driving both users and search engines straight to your important products rather than starting to dive into a deep faceted navigation. It is the sort of thing where sites should experiment and find out what works best for them.

There are ways to do your site architecture, rather than sculpting the PageRank, where you are getting products that you think will sell the best or are most important front and center. If those are above-the-fold items, people are very likely to click on them. You can distribute that PageRank very carefully between related products, and use related links straight to your product pages rather than into your navigation. I think there are ways to do that without necessarily going towards trying to sculpt PageRank.

Eric Enge: If someone did choose to do that (JavaScript encoded links or use an iFrame), would that be viewed as a spammy activity or just potentially a waste of their time?

"the original changes to NoFollow to make PageRank Sculpting less effective are at least partly motivated because the search quality people involved wanted to see the same or similar linkage for users as for search engines"

Matt Cutts: I am not sure that it would be viewed as a spammy activity, but the original changes to NoFollow to make PageRank Sculpting less effective are at least partly motivated because the search quality people involved wanted to see the same or similar linkage for users as for search engines. In general, I think you want your users to be going where the search engines go, and that you want the search engines to be going where the users go.

In some sense, I think PageRank Sculpting is trying to diverge from that. If you are thinking about taking that step, you should ask yourself why you are trying to diverge and send bots in a different location than users. In my experience, we typically want our bots to be seen on the same pages and basically traveling in the same direction as search engine users. I could imagine down the road if iFrames or weird JavaScript got to be so pervasive that it would affect the search quality experience, we might make changes on how PageRank would flow through those types of links.

It's not that we think of them as spammy necessarily, so much as we want the links and the pages that search engines find to be in the same neighborhood and of the same quality as the links and pages that users will find when they visit the site.

Eric Enge: What about PDF files?

Matt Cutts: We absolutely do process PDF files. I am not going to talk about whether links in PDF files pass PageRank. But, a good way to think about PDFs is that they are kind of like Flash in that they aren't a file format that's inherent and native to the web, but they can be very useful. In the same way that we try to find useful content within a Flash file, we try to find the useful content within a PDF file. At the same time, users don't always like being sent to a PDF. If you can make your content in a Web-Native format, such as pure HTML, that's often a little more useful to users than just a pure PDF file.

Eric Enge: There is the classic case of somebody making a document that they don't want to have edited, but they do want to allow people to distribute and use, such as an eBook.

Matt Cutts: I don't believe we can index password protected PDF files. Also, some PDF files are image based. There are, however, some situations in which we can actually run OCR on a PDF.

Eric Enge: What if you have a text-based PDF file rather than an image-based one?

Matt Cutts: People can certainly use that if they want to, but typically I think of PDF files as the last thing that people encounter, and users find it to be a little more work to open them. People need to be mindful of how that can affect the user experience.

Eric Enge: With the new JavaScript processing, what actually are you doing there? Are you actually executing JavaScript?

Matt Cutts: For a while, we were scanning within JavaScript, and we were looking for links. Google has gotten smarter about JavaScript and can execute some JavaScript. I wouldn't say that we execute all JavaScript, so there are some conditions in which we don't execute JavaScript. Certainly there are some common, well-known JavaScript things like Google Analytics, which you wouldn't even want to execute because you wouldn't want to try to generate phantom visits from Googlebot into your Google Analytics.

We do have the ability to execute a large fraction of JavaScript when we need or want to. One thing to bear in mind if you are advertising via JavaScript is that you can use NoFollow on JavaScript links.

Eric Enge: If people do have ads on their site, it's still Google's wish that they NoFollow those links, correct?

Matt Cutts: Yes, absolutely. Our philosophy has not changed, and I don't expect it to change. If you are buying an ad, that's great for users, but we don't want advertisements to affect search engine rankings. For example, if your link goes to a redirect, that redirect could be blocked from robots.txt, which would make sure that we wouldn't follow that link. If you are using JavaScript, you can do a NoFollow within the JavaScript. Many, many ads use 302s, specifically because they are temporary. These ads are not meant to be permanent, so we try to process those appropriately.

Our stance has not changed on that, and in fact we might put out a call for people to report more about link spam in the coming months. We have some new tools and technology coming online with ways to tackle that. We might put out a call for some feedback on different types of link spam sometime down the road.

Eric Enge: So what if you have someone who uses a 302 on a link in an ad?

Matt Cutts: They should be fine. We typically would be able to process that and realize that it's an ad. We do a lot of stuff to try to detect ads and make sure that they don't unduly affect search engines as we are processing them.

The nice thing is that the vast majority of ad networks seem to have many different types of protection. The most common is to have a 302 redirect go through something that's blocked by robots.txt, because people typically don't want a bot trying to follow an ad, as it will certainly not convert since the bots have poor credit ratings or aren't even approved to have a credit card. You don't want it to mess with your analytics anyway.

Eric Enge: In that scenario, does the link consume link juice?

Matt Cutts: I have to go and check on that; I haven't talked to the crawling and indexing team specifically about that. That’s the sort of thing where typically the vast majority of your content is HTML, and you might have a very small amount of ad content, so it typically wouldn't be a large factor at all anyway.

Eric Enge: Thanks Matt!

Matt Cutts: Thank you Eric!
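The ad protection Matt describes, a redirect routed through a path that robots.txt blocks, is easy to check with Python's standard `urllib.robotparser`. The sketch below is illustrative only: the host `ads.example.com`, the `/adclick/` path, and the helper `crawler_may_follow` are hypothetical, and the robots.txt is parsed from a string rather than fetched. The 302 redirect itself is not shown.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an ad network's click-tracking host.
ROBOTS_TXT = """\
User-agent: *
Disallow: /adclick/
"""


def crawler_may_follow(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots.txt allows this user agent to fetch the URL."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network access is needed.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(crawler_may_follow("https://ads.example.com/adclick/?id=123"))  # False
print(crawler_may_follow("https://ads.example.com/about"))  # True
```

Because the click-tracking path is disallowed for every user agent, a well-behaved crawler stops at the redirect and never follows the ad through to the advertiser.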

Sunday, February 28, 2010

126 Million Blogs on the Internet

JESS3 / The State of The Internet from Jesse Thomas on Vimeo.

Tuesday, January 26, 2010

SEO 101 - Part 2: Everything You Need to Know About Title Tags

The following series is pulled from a presentation Stoney deGeyter gave to a group of beauty bloggers hosted by L'Oreal in New York. Most of the presentation is geared toward making a blog more search engine and user-friendly; however, I will expand many of the concepts here to include tips and strategies for sites selling products or services in all industries.

On-Page Optimization

A website can do just fine online without SEO. PPC, social media and other properly implemented offline marketing efforts can really help a site succeed online with little or no SEO. But unless and until you begin to SEO your site, it will always underperform, never quite reaching its full potential. Without SEO, you'll always be missing out on a great deal of targeted traffic that the other avenues cannot make up for.

So where do we start? SEO can be so broad and vast that we often don't know where we should begin, what will give us the greatest impact, and how to move forward. That's what I hope to answer here.

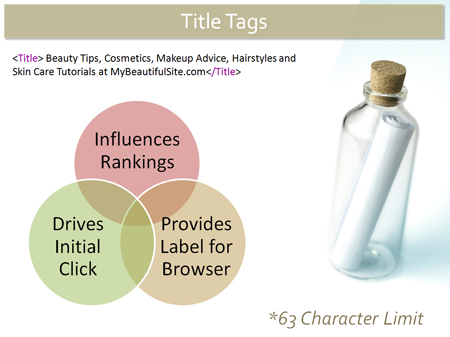

Building Good Title Tags

The title tag is the single most important piece of SEO real estate on your site. A title tag can be as long as you want, but you only have about 63 characters before the search engines cut it off. So use it wisely.
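To get a feel for how a long title might be shortened in the results, here is a minimal Python sketch. It assumes a flat cutoff of 63 characters, taken from the figure above. In practice engines vary and may truncate by displayed width rather than character count, so treat `preview_title` (a hypothetical helper) as a rough preview only.

```python
TITLE_CUTOFF = 63  # approximate limit from this post; real engines vary


def preview_title(title: str, limit: int = TITLE_CUTOFF) -> str:
    """Return the title roughly as it might be displayed, cut at a word boundary."""
    title = " ".join(title.split())  # collapse stray whitespace and newlines
    if len(title) <= limit:
        return title
    cut = title[:limit]
    # Back up to the last space so the preview doesn't end mid-word.
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    # Drop trailing separators, then mark the truncation.
    return cut.rstrip(" |-,:") + " ..."
```

For example, `preview_title("How to Look Beautiful Even When You Don't Feel Like It")` comes back unchanged because it is 54 characters long, while a 99-character title is cut after its last complete word before the limit.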

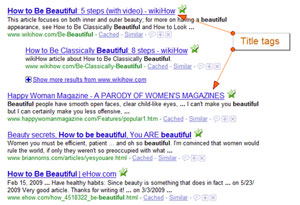

Since the title appears as the clickable link on the search engine results pages (SERPs), it has to meet a couple of different demands.

Keyword rich

Searchers type specific words into the search engines, and they expect the engines to return results that match their query. We know that the search engines look at over 200 different signals to determine the relevance of any page to the keyword searched. The title tag is one of them, and a very important one. You don't necessarily need your keyword in the title tag for the page to come up in the search results, but it helps a great deal.

But what about the visitor? What does the searcher see? Let's say a searcher types "how to be beautiful" into the search engine and two results are displayed. One reads "How to Look Good and Feel Great" and another reads "How to Look Beautiful Even When You Don't Feel Like It." Which of these two is more likely to be clicked by the visitor?

It's entirely likely that both pages address the same concerns, but only one uses the searched keyword. More than likely, the second result will get far more clicks than the first, even if it is in a lower position in the results (which isn't likely, but let's pretend anyway).
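As a crude illustration of the comparison above, a few lines of Python can show which words of the query each title actually contains. This is only a word-overlap check under simple assumptions (lowercasing and splitting on letters, digits and apostrophes); it is not how search engines score relevance, and `query_terms_in_title` is a hypothetical helper.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into simple word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def query_terms_in_title(query: str, title: str) -> set[str]:
    """Return the query words that also appear as whole words in the title."""
    return tokenize(query) & tokenize(title)


query = "how to be beautiful"
print(sorted(query_terms_in_title(query, "How to Look Good and Feel Great")))
print(sorted(query_terms_in_title(
    query, "How to Look Beautiful Even When You Don't Feel Like It")))
```

Only the second title contains "beautiful", the one word in the query that carries the searcher's intent.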

Compelling

The next thing your title tag needs to be is compelling. We looked at how putting keywords in the title makes it more likely to be clicked, but that is only part of the issue. Going back to our example above, suppose we put the first headline, the one that doesn't use the keyword, up against a third, keyword-rich headline: "Sexy and Beautiful, Today's Hottest Stars." Which do you think will gain more clicks? My guess is the first one, even without keywords, because it is far more compelling and speaks more directly to the searcher's intent. So in this situation the third headline is likely to rank higher but will receive fewer clicks.

The trick is to make sure that the title tag is both keyword-rich and compelling. This will help move your site toward the top of the rankings and also make visitors more likely to click through to your site.

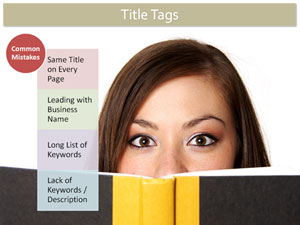

Common mistakes

Implementing your title tags properly is crucial to ensuring they are effective. There are a number of easy mistakes that you can make if you don't take the time to do it right. It's easy to want to blast through your title tags, especially if you have a lot of pages. But because the title tag is so important, you want to take care in developing them properly. Here are a few common issues:

Same on Every Page: Each page in your site is unique, or at least it should be. This means your title tags should be unique on each page as well. On a lot of sites you'll see the same title tag across all the pages: "Welcome to My Site," or something like that. That hardly describes the page at all, and if that is what shows in the search results, you're not likely to get many clicks. Go through the site and customize each title, ensuring it uniquely and accurately describes the content of the page.

Leading with Business Name: There are good reasons to have your business name in your title tag, but it should not be there by default. If you use your business name, think through the reasoning and make sure it's sound. The limited space in the title tag means you should use your business name only with great care and consideration. I'll discuss this more in a bit.

List of Keywords: Wanting to get your keywords into the title tag makes it tempting to throw in as many as you possibly can: "Beauty | Makeup | Makeovers | Diet | Healthy Skin." Sure, that gets all your keywords in there, but it does nothing to make someone want to click on the result. This means that (gasp!) you have to use keywords sparingly so you can also make the title something worth clicking on.

Lack of Description: Aside from getting your primary keywords in the title, and making it compelling, you also have to make sure the title tag provides enough of a description of the content to ensure it gets a targeted click. No sense having someone click into the site only to find the information on the page isn't what they expected. Make sure that the title describes the content in a compelling and keyword friendly way.
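Catching the "Same on Every Page" problem by hand gets tedious on a large site. Here is a minimal Python sketch using the standard `html.parser` module. It assumes you already have each page's HTML in memory as a dictionary keyed by URL; how you crawl or export those pages is up to you, and `find_duplicate_titles` is a hypothetical helper. Pages with no title at all are grouped under an empty string.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self.in_title:
            self.in_title = False
            # Normalize whitespace so "A  title" and "A title" compare equal.
            self.title = " ".join("".join(self._parts).split())

    def handle_data(self, data):
        if self.in_title:
            self._parts.append(data)


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each title used on more than one page to the URLs that share it."""
    by_title = defaultdict(list)
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        by_title[parser.title or ""].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}
```

Every title this returns is a candidate for the rewrite described above: make it unique and make it describe the page.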

Branded titles

So let's address using your business name in your title tags. As I said earlier, sometimes it's wise, but it shouldn't be the default position.

In general, you can place your business name either at the front or the rear of the title tag. My rule of thumb is that you don't want to put your business name at the front of your title tag unless you have a highly recognizable brand name that the visitor will know and will likely be a click-generator from the search results. If that's not the case then you simply don't want to give up that real estate.

Branding at the rear of the title tag is a far better solution for most businesses. It helps moderately known or even unknown companies build brand name recognition. The downside of branding your title tags this way is that you are still using up valuable real estate that could otherwise go toward a keyword-rich and compelling headline. Also note that if the title runs too long, your business name will be cut off in the search results.
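One way to apply "brand at the rear, but only if it fits" consistently across many pages is a small helper like the Python sketch below. It assumes the approximate 63-character limit mentioned earlier and a `" | "` separator; both are illustrative choices, and `branded_title` is a hypothetical name.

```python
TITLE_CUTOFF = 63  # approximate display limit mentioned earlier in this post


def branded_title(headline: str, brand: str, limit: int = TITLE_CUTOFF,
                  separator: str = " | ") -> str:
    """Append the brand to the end of the title only when the whole thing fits."""
    candidate = f"{headline}{separator}{brand}"
    if len(candidate) <= limit:
        return candidate
    # Not enough room: keep the keyword-rich headline and drop the brand
    # rather than letting the brand get cut off mid-word.
    return headline
```

With this rule the headline always wins. `branded_title("Hair Care Tips", "Acme Salon")` gives `"Hair Care Tips | Acme Salon"`, while a headline that already uses most of the limit is returned without the brand.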

Most of the time you don't need your business name in your title tags at all, and there is one type of page where I would suggest leaving it off almost 90% of the time: product pages. It's so crucial to get important product data into the title tag that there often simply isn't enough room for your business name. Again, I might make an exception for well-known business names, but default to showing product info first and foremost.

Read SEO 101 - Part 1.

Sunday, January 24, 2010

Answer highlighting in Google search results

The feature is meant for searches with factual answers. If the pages returned for these queries contain a simple answer, the search snippet will more often include the relevant text and bold it for easy reference.

Saturday, January 23, 2010

Events Rich Snippet Format from Google

Last Friday, Google Webmaster Central (GWC) announced a new Rich Snippet format for "events".

Events markup is based on the hCalendar microformat. Here's an example of what the new event Rich Snippets will look like:

The new format shows links to specific events on the page along with dates and locations. It provides a fast and convenient way for users to determine if a page has events they may be interested in.

If you have event listings on your site, we encourage you to review the events documentation we've prepared to help you get started. Please note, however, that marking up your content is not a guarantee that Rich Snippets will show for your site. Just as we did for previous formats, we will take a gradual approach to incorporating the new event snippets to ensure a great user experience along the way.
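For sites that generate event listings from a database, the hCalendar markup can be produced by the same template code. The Python sketch below emits one event using the standard hCalendar class names (`vevent`, `url`, `summary`, `dtstart`, `location`). It is illustrative only: the event details are hypothetical, the layout is one of several valid ways to mark up an event, and Google's own events documentation is the authority on what it actually reads.

```python
from html import escape


def hcalendar_event(summary: str, start_iso: str, location: str, url: str) -> str:
    """Render one event as hCalendar markup (one of several valid layouts)."""
    return (
        '<div class="vevent">\n'
        f'  <a class="url summary" href="{escape(url)}">{escape(summary)}</a>\n'
        # The machine-readable ISO date goes in the title attribute;
        # the visible text shows just the date portion.
        f'  <abbr class="dtstart" title="{escape(start_iso)}">{escape(start_iso[:10])}</abbr>\n'
        f'  <span class="location">{escape(location)}</span>\n'
        '</div>'
    )


print(hcalendar_event("Spring Makeup Workshop", "2010-03-20T10:00",
                      "New York, NY", "https://www.example.com/events/spring"))
```

Escaping each value keeps event names containing characters like `&` or `<` from breaking the page's HTML.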

Tuesday, January 19, 2010

Understanding Google Synonyms search results

Enabling computers to understand language remains one of the hardest problems in artificial intelligence. The goal of a search engine is to return the best results for your search, and understanding language is crucial to returning the best results. A key part of this is our system for understanding synonyms.

What is a synonym? An obvious example is that "pictures" and "photos" mean the same thing in most circumstances. If you search for [pictures developed with coffee] to see how to develop photographs using coffee grinds as a developing agent, Google must understand that even if a page says "photos" and not "pictures," it's still relevant to the search. While even a small child can identify synonyms like pictures/photos, getting a computer program to understand synonyms is enormously difficult, and we're very proud of the system we've developed at Google.
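To make the pictures/photos example concrete, here is a toy Python sketch of query-time synonym expansion. It only illustrates the idea described above. Google's actual system is learned from data and is not described in enough detail here to reproduce; the hand-written `SYNONYMS` table and naive whitespace tokenization are assumptions made for the example.

```python
# Hypothetical, hand-written synonym table; a real system learns these from data.
SYNONYMS = {
    "pictures": {"photos"},
    "photos": {"pictures"},
}


def expand(term: str) -> set[str]:
    """Return the term together with any known synonyms."""
    return {term} | SYNONYMS.get(term, set())


def matches(query: str, page_text: str) -> bool:
    """True if every query term, or one of its synonyms, appears in the page."""
    words = set(page_text.lower().split())  # naive whitespace tokenization
    return all(expand(term) & words for term in query.lower().split())


print(matches("pictures developed with coffee",
              "how photos are developed with coffee"))
```

The page never says "pictures", but it still matches because "photos" is treated as an acceptable substitute for that query term.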

Our synonyms system is the result of more than five years of research within our web search ranking team. We constantly monitor the quality of the system, but recently we made a special effort to analyze the impact and quality of synonyms. Most of the time, you probably don't notice when your search involves synonyms, because it happens behind the scenes. However, our measurements show that synonyms affect 70 percent of user searches across the more than 100 languages Google supports. We took a set of these queries and analyzed how precise the synonyms were, and were happy with the results: For every 50 queries where synonyms significantly improved the search results, we had only one truly bad synonym.